Select iPhone models running iOS 15 or later can identify plants and animals, an impressive demonstration of Apple's Visual Lookup feature, which lets users learn more about famous landmarks, art, plants, flowers and pets. The feature was introduced alongside Live Text as part of iOS 15, a release that focused on adding more actions within photos using on-device intelligence. Because of the processing this requires on the iPhone itself, it makes sense that only specific iPhone models can take advantage of the feature. By keeping as much of that processing on-device as possible, Apple can protect user privacy by keeping the data off its servers altogether.

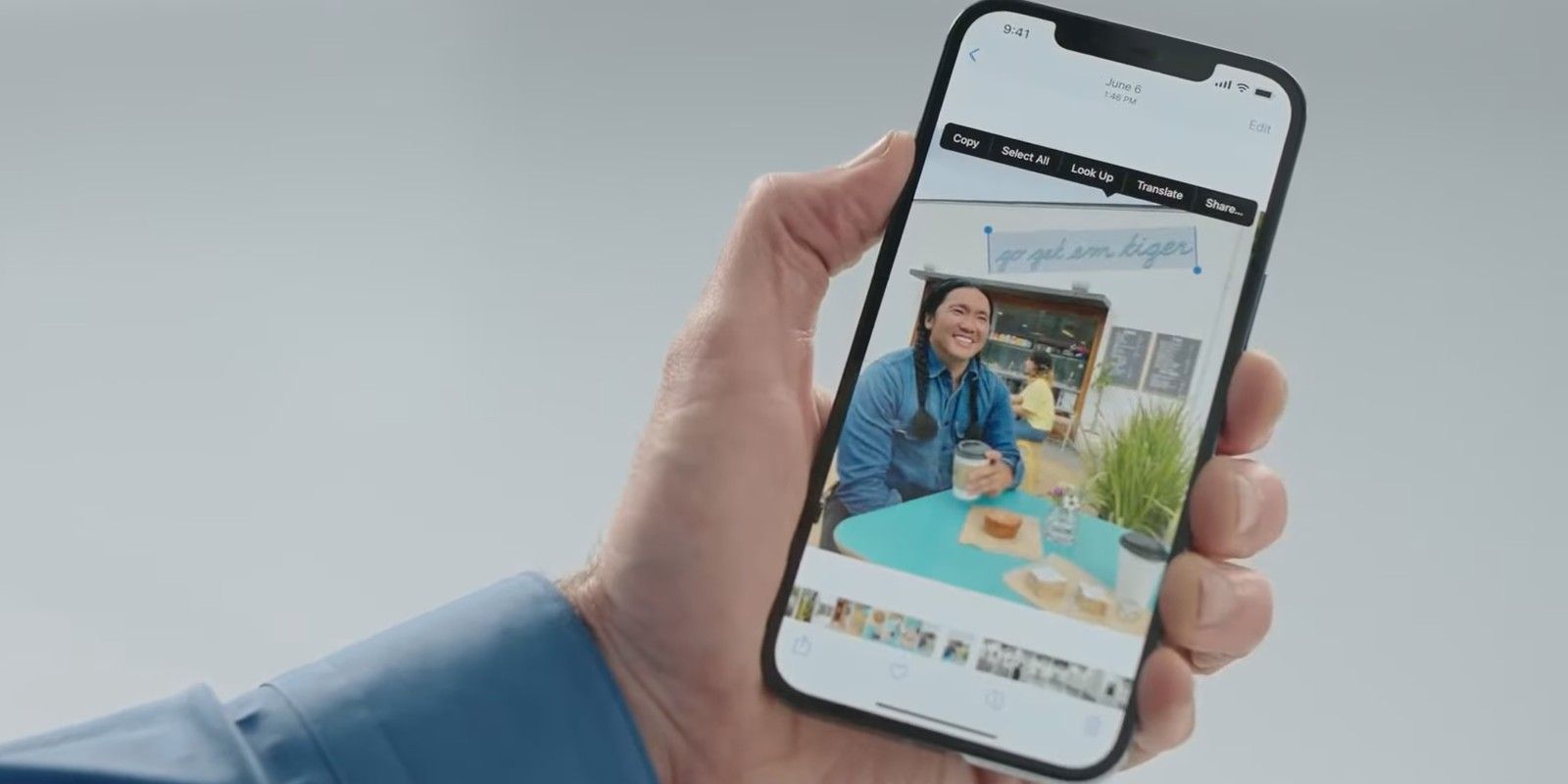

Both Live Text and Visual Lookup scan objects within photos saved to the device's camera roll, but their functions differ. Live Text extracts data from a photo, while Visual Lookup aims to provide information about a subject, whether by identifying it or by adding background information. Users can view the text in any image that is compatible with Live Text, which will typically be text on prominent signs or in photographed documents. That text can be copied, searched on the web, or shared with other people and apps. For plants and animals that users cannot identify on their own, however, Visual Lookup can fill in the gaps and add more information about the flora and fauna captured on the iPhone.

Visual Lookup scans the subjects and other items within a photo and tries to provide more information about its contents. The feature seems powerful, but its scope is limited by the devices and regions it supports. Visual Lookup is currently limited to the United States, and it is unclear whether it will make its way to other countries or regions in the future. Similarly, it only works with specific languages. It also requires an iPhone XS, iPhone XR or later; both of those models were released in 2018. Device compatibility is unlikely to be a major issue, however, as Visual Lookup works on the last four generations of iPhones.

How To Use & New Features

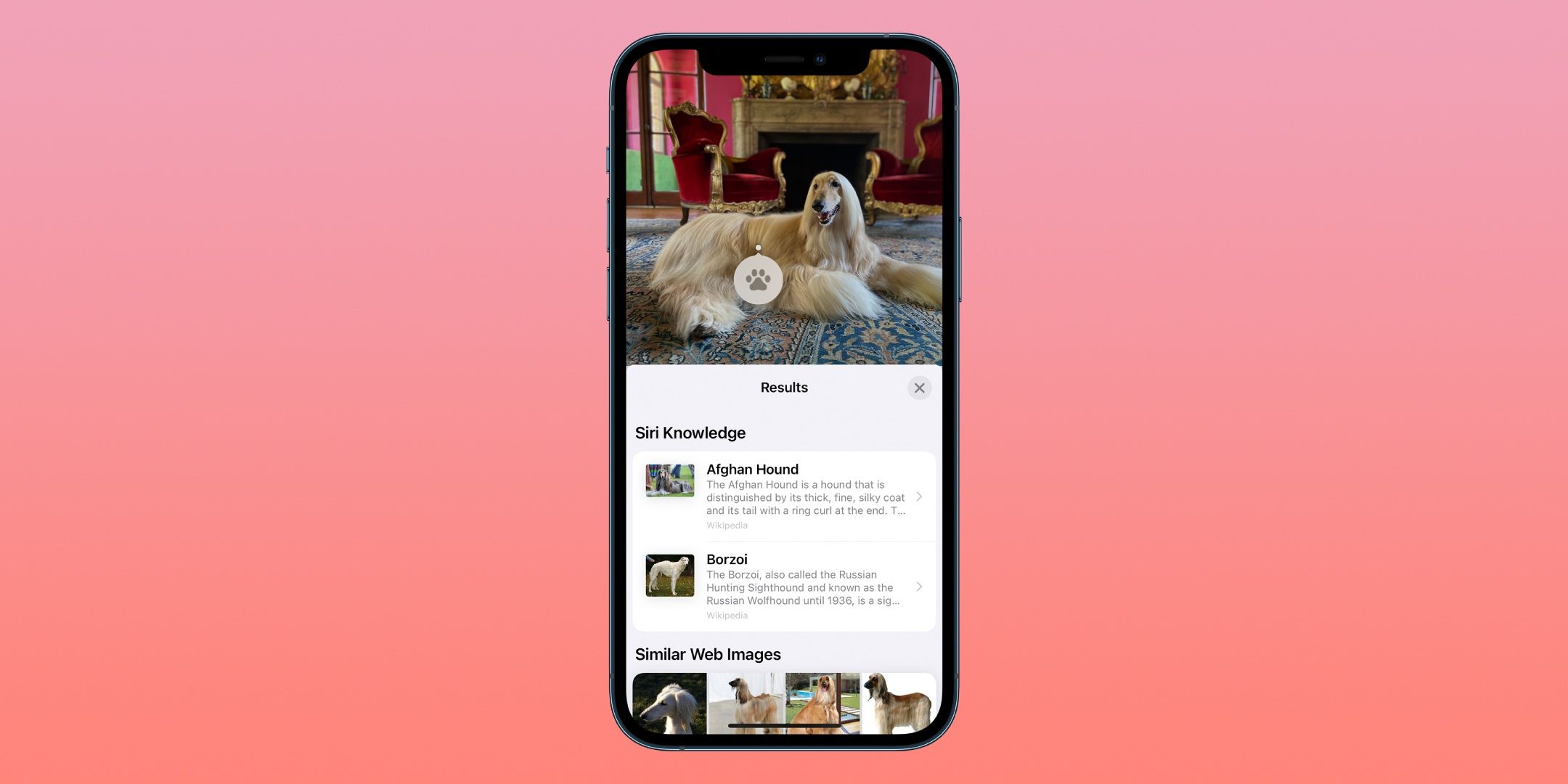

To see whether a photo has elements that Visual Lookup can detect, open it in full screen in the Photos app. Every photo in the Photos app shows an 'i' button at the bottom of the screen when it is opened. When a photo contains elements that Visual Lookup can detect, however, the 'i' button is partially covered by two floating star icons. Tap this icon to bring up the photo details, which include the option to add a caption and can show information about the lens used to take the photo. When a plant or animal is detected, a 'Look Up — Plant' or 'Look Up — Animal' button appears directly underneath the caption bar. Tap it to bring up Siri's findings about the subject, including the plant or animal name, photos, scientific name and other information.

Although Visual Lookup is already available in iOS 15, it is slated to receive improvements in the upcoming iOS 16 release. With the new version, users can copy the subject of a photo, separated from its background, and paste it into other apps. They can also drag and drop the subject into other apps, such as a Messages field or a document. In iOS 16 betas, the implementation works well but sometimes misses part of the subject in the cutout. The feature will likely be refined as iOS 16 development continues ahead of its expected public release in fall 2022.

Source: Apple, Apple Support