Twitter might soon be a little less toxic for many users, thanks to a new Safety mode the company plans to add. Twitter and many other social media services have come under more intense scrutiny recently over concerns that not enough is being done to improve moderation. As a result, many services have turned to additional features to create a better user experience, and Safety mode is the latest example of Twitter's efforts.

Social media services have been the subject of many conversations in recent years. Whether the issue is accusations of political bias, facilitating the spread of misinformation, promoting dangerous trends, or claims of anti-competitive practices, most of the big-name services have been criticized to some degree. Despite these growing concerns, social media services continue to expand, while newer ones arrive and attempt to make their mark on the industry.

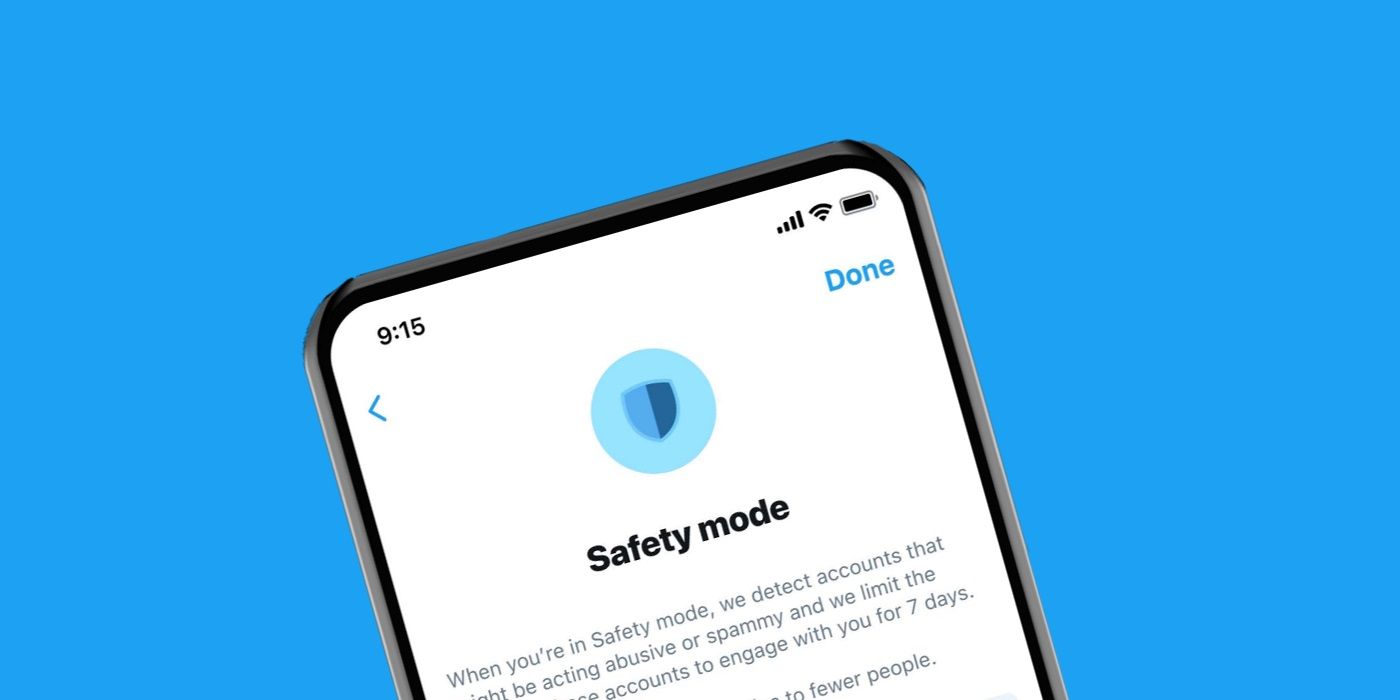

Twitter is no exception, and during its Analyst Day the company discussed several new features it is currently exploring. One of them is a new Safety mode, which Twitter describes as a “smarter tool” that can “proactively help” protect users. Although the company effectively announced the feature today, Twitter did not say when users of the micro-blogging platform can expect Safety mode to become available.

How Safety Mode Keeps Twitter Users Safe

Essentially, Safety mode is exactly what its name suggests: a mode that, once enabled, creates a safe space that other users cannot enter as easily as they otherwise could. Once Safety mode is toggled on, Twitter uses its algorithms and other automated systems to detect accounts that it considers “abusive and spam.” These accounts are then automatically prevented from interacting with the Safety mode user. However, this is not a permanent, always-on solution, as Safety mode only lasts for seven days. After that, the user would likely need to re-enable it to remain protected in the same way. Twitter also plans to warn users when their Tweets are receiving abusive or spam replies. Presumably, this warning will nudge users to consider enabling Safety mode before the replies get worse.

Although the prompt acts as a preemptive measure, the response still requires users to enable Safety mode themselves, rather than Twitter providing that protection by default or dealing with the abusive and spam accounts itself. Twitter's recently announced Birdwatch program is based on a similar premise, as it effectively asks users to help moderate the platform themselves. While it is good that Twitter is considering changes like these and looking for thoughtful ways to implement them, it is becoming increasingly clear that Twitter is basing much of its moderation effort on action from users, which makes these tools somewhat less proactive than the company suggests.

Source: Twitter