TikTok is rolling out a new moderation system that automatically removes content breaching its platform policies, along with a revamped system for alerting users to violations. The wildly popular social media app has recently drawn criticism for its flawed content moderation practices, and occasionally for gaffes caused by technical errors.

Earlier this month, TikTok said it had banned the intersex hashtag by mistake, and drew considerable criticism over it. The app has also previously blocked hashtags that led users to videos of fake COVID-19 tests, but failed to remove the misleading videos themselves, which showed the dangerous stunt in action. Something similar happened last year when TikTok banned QAnon hashtags: the videos carrying them remained live on the platform for quite some time.

In a bid to make content moderation faster and more effective, TikTok is now automating the removal of videos that violate its content guidelines. Until now, an automated system could only flag a video for potentially harmful content, while a team of human moderators was responsible for reviewing it and, if necessary, removing it. The automatic removal system will go into effect next week and will act on violations in categories such as sexual activity or nudity, violent or graphic content, and illegal activities and regulated goods.

A Step In The Right Direction, But A Long Way To Go

TikTok acknowledges that its automatic content removal system is not perfect, but says it will keep improving over time. To keep incorrect removals in check, creators will be able to file an appeal, which will then be handled by a human moderator. TikTok will also continue to let creators, and its community as a whole, report videos for potential policy violations. The automated system will still have hurdles to overcome, such as AI bias, but it is a good start. Part of the goal is to reduce the stress on moderators who are exposed to disturbing content every day. If the history of Facebook and Twitter is anything to go by, however, TikTok has a long way to go before its automatic content removal system can be called effective.

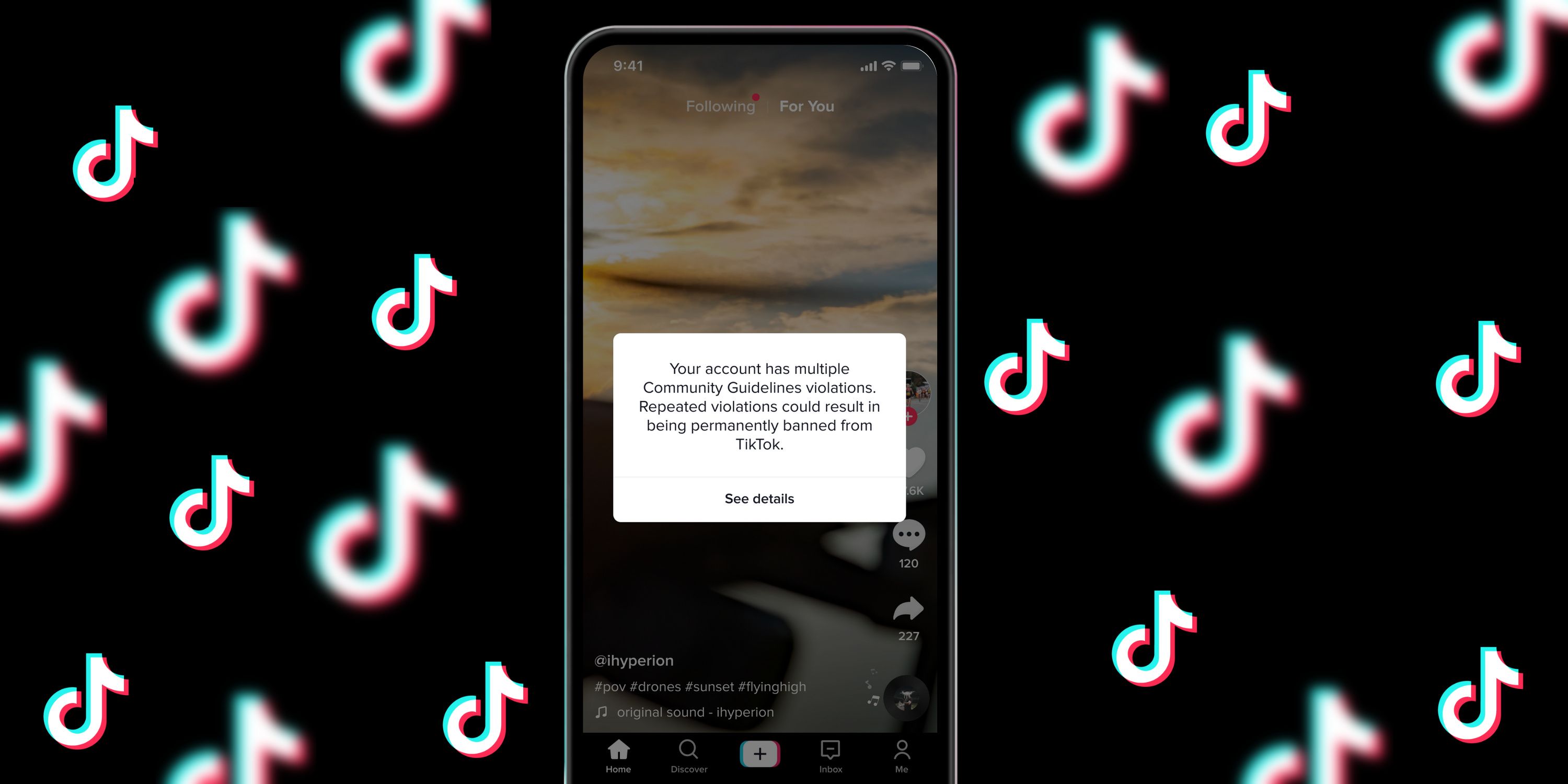

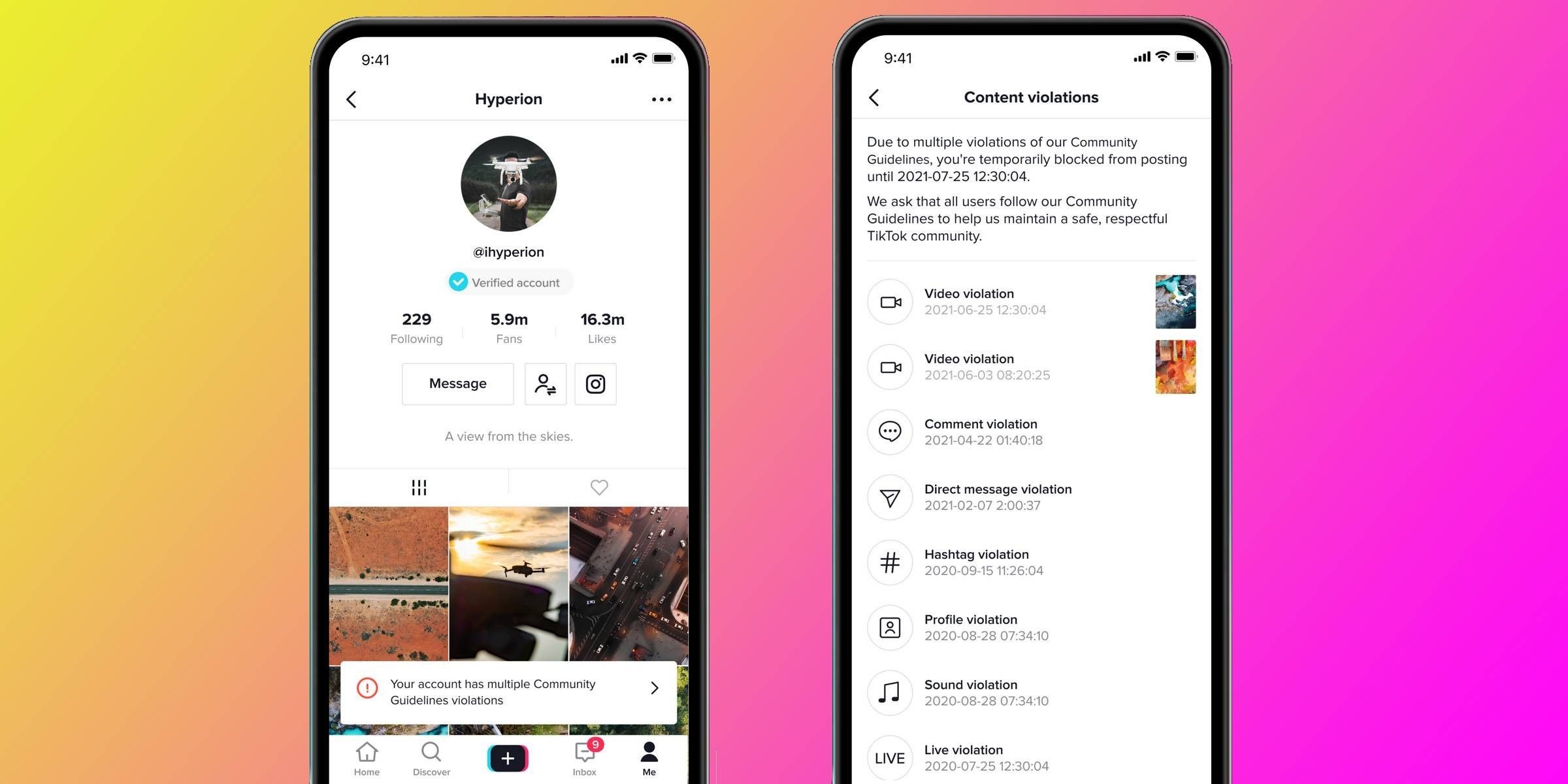

While the effectiveness of TikTok’s automatic video removal system remains to be seen, the company is also changing how creators, and its users in general, are informed about policy violations on their accounts. TikTok will deliver these notices in the Accounts Update section of the inbox, categorized into sections such as videos, audio, or comments, depending on which content rules were broken. A first violation will result in an in-app warning, while subsequent violations will bring penalties such as being unable to upload content for up to 48 hours. Repeated violations will first trigger a warning of an impending ban, and if the behavior does not change, TikTok will permanently ban the account. As with removed content, creators will be able to request a review if they believe their account has been incorrectly restricted or suspended.

Source: TikTok