During Tesla's AI Day event, the company described the lengths to which it has gone to build Dojo, its exaflop-class supercomputer, and what the system will be capable of in terms of raw processing power. It is an impressive feat that, once complete, would place Tesla alongside, and possibly ahead of, the most powerful computers in the world. Given that Tesla is an automaker and not a computer manufacturer, some might wonder why it built Dojo and what the system will do for its electric vehicles.

Current supercomputers reach roughly 500 petaflops, and upcoming systems are expected to reach 1 exaflop, matching Tesla's Dojo. The term "flops" stands for floating point operations per second, where one operation is, for example, multiplying two numbers together with high precision. The prefixes more commonly known in computing are 'mega,' 'giga,' and 'tera,' usually preceding 'byte' and signifying a million, a billion, and a trillion bytes of data. A petaflop is equal to a thousand teraflops, and an exaflop is larger still, equivalent to a million teraflops. The performance of these computers is hard to picture except in relative terms. Tesla's Dojo, when complete, might be the fastest computer in the world for artificial intelligence training.
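To make those prefixes concrete, here is a minimal Python sketch of the conversions. The `PREFIX` table and the `convert` helper are illustrative names chosen for this example, not anything published by Tesla.

```python
# SI prefixes as powers of ten. Applied to "flops", each value is the
# number of floating point operations per second.
PREFIX = {
    "mega": 10**6,
    "giga": 10**9,
    "tera": 10**12,
    "peta": 10**15,
    "exa": 10**18,
}


def convert(value, from_prefix, to_prefix):
    """Convert a quantity from one SI prefix to another."""
    return value * PREFIX[from_prefix] / PREFIX[to_prefix]


print(convert(1, "peta", "tera"))   # 1000.0
print(convert(1, "exa", "tera"))    # 1000000.0
print(convert(500, "peta", "exa"))  # 0.5
```

The last line shows that a 500-petaflop machine sits at half an exaflop, which is why a 1-exaflop system counts as a new class.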

Tesla says it designed its own supercomputer as it found current solutions insufficient for the processing power and volume of data required to advance its Full Self-Driving (FSD) training needs. Many will be familiar with "dojo" as the name of a martial arts training studio, and the Dojo supercomputer detailed during Tesla's livestream is how Tesla will train its artificial intelligence (AI) models to understand various objects and situations that might be encountered on the road. Since the hardest situations to prepare a self-driving system for are rare and unpredictable events, waiting to collect enough real-world data for training is too slow. Tesla captures the few examples that exist and uses them in simulations that closely mimic reality, even creating realistic renders with ray tracing, shadows, day or night lighting, and the strobe of emergency vehicle lights, so the FSD computer model can run through vastly more iterations and learn situations better and faster. This requires massive processing power and a huge model, which is too demanding for most computer systems. Tesla has brought FSD to a usable level despite this challenge, but it needs Dojo to push it further.

Tesla's Impressive Dojo Design

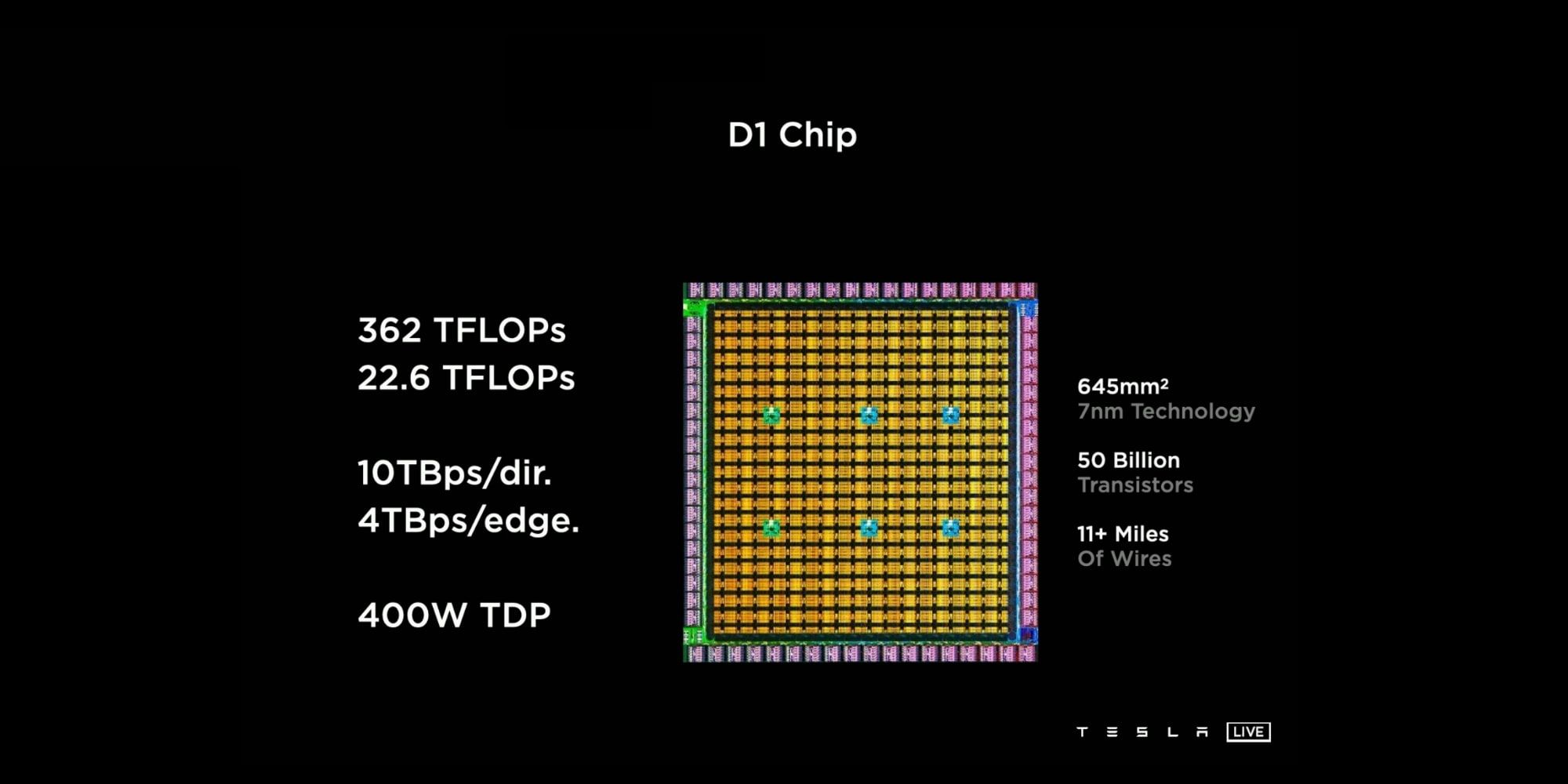

The D1 chip, which is the muscle behind the Dojo supercomputer, was designed first for maximum bandwidth: the communication channels were laid in before the floating point multipliers and other neural processing components, ensuring that information could move as quickly as possible. This high-performance chip is produced using TSMC's 7-nanometer manufacturing process, which improves efficiency. Even so, this 362-teraflop chip containing 50 billion transistors has a 400-watt thermal design power, meaning it draws considerable power and generates a large amount of heat. Twenty-five of these D1 chips are laid out in a five-by-five grid to create a training tile with 9 petaflops of performance, drawing 18,000 amps of current and dissipating 15 kilowatts of heat.
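The tile figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted in this article; the variable names are illustrative, and the implied voltage is a rough estimate that assumes the 18,000-amp and 15-kilowatt figures describe the same load.

```python
D1_TFLOPS = 362          # per-chip throughput quoted above
D1_TDP_WATTS = 400       # per-chip thermal design power quoted above
CHIPS_PER_TILE = 5 * 5   # five-by-five grid

TILE_HEAT_WATTS = 15_000   # quoted for the whole training tile
TILE_CURRENT_AMPS = 18_000 # quoted for the whole training tile

# Compute throughput of one tile, in petaflops.
tile_pflops = D1_TFLOPS * CHIPS_PER_TILE / 1000

# Heat attributable to the 25 chips alone, in kilowatts.
chip_heat_kw = D1_TDP_WATTS * CHIPS_PER_TILE / 1000

# Power = voltage x current, so the implied average supply voltage is:
implied_volts = TILE_HEAT_WATTS / TILE_CURRENT_AMPS

print(tile_pflops)              # 9.05
print(chip_heat_kw)             # 10.0
print(round(implied_volts, 2))  # 0.83
```

The 25 chips account for 10 of the 15 kilowatts quoted for the tile. The article does not say where the remainder goes, although power delivery and other tile components are a plausible guess. A supply voltage under one volt would also explain why the current figure is so large.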

That isn't the end of the story either. Tesla claims each training tile is self-contained and modular, so multiple tiles can be combined, and 120 of them together deliver the 1.1-exaflop capability. Known as an ExaPOD, this system can be virtually partitioned into Dojo processing units (DPUs) to perform tasks of any size. Tesla claims four times the performance and about one quarter less energy use when compared with current systems. With this amount of AI processing power, Tesla's FSD system should be able to improve more rapidly and handle unusual driving scenarios more gracefully.
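As a rough check on the headline number, the following sketch scales the quoted per-chip figure up to a full ExaPOD. The constant names are illustrative, and the calculation assumes every chip contributes its full quoted throughput.

```python
D1_TFLOPS = 362        # per-chip throughput quoted in the article
CHIPS_PER_TILE = 25    # five-by-five grid of D1 chips
TILES_PER_EXAPOD = 120 # tiles combined into one ExaPOD

total_chips = CHIPS_PER_TILE * TILES_PER_EXAPOD
total_tflops = D1_TFLOPS * total_chips

# One exaflop is a million teraflops.
exapod_eflops = total_tflops / 10**6

print(total_chips)    # 3000
print(exapod_eflops)  # 1.086
```

The result, about 1.09 exaflops, rounds to the 1.1 exaflops Tesla quotes.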

Source: Tesla/YouTube