A new experimental system has allowed a robotic arm to map its own physical form and movement. Most robots lack any physical sense of self: they cannot intrinsically "know" anything about their own bodies. Machines don't inherently know what they are, where they are, or what any of their parts is doing. They carry out preprogrammed instructions without any regard for context.

This lack of physical awareness has caused many robotics hurdles over the years. Robots with walking legs and grasping hands are notoriously difficult to build for many reasons, and the robot's lack of physical awareness is one contributing factor. It can lead to a gripping robot crushing or dropping an object because it has no context for what it is holding or how tightly to squeeze it. It can also lead to a legged robot that has fallen over continuing to move its legs to follow its walking instructions.

Scientists at Columbia University have tested one way to address this limitation. In their experiment, an AI-controlled robotic arm was allowed to watch itself through five cameras simultaneously as it moved freely. The AI explored its range of motion and tracked how the parts of the arm moved, mapping the camera images into a self-model of the entire arm. The final model allowed the AI to predict and understand the arm's motion with a 1 percent error, which would give it a greater ability to move on its own without the need for constant feedback and control.

The Purpose Of A Self-Model

Humans and other creatures with physical awareness have an intuitive sense of where their limbs are and what they are doing, because they naturally acquire a self-model at a young age. This self-model lets them keep track of their bodies even when they are not directly perceiving them, including while asleep. A similar ability could help robots move through 3D space without damaging themselves or their surroundings, manipulate their limbs more precisely, and even identify their own malfunctions when a problem prevents them from moving as their model predicted.

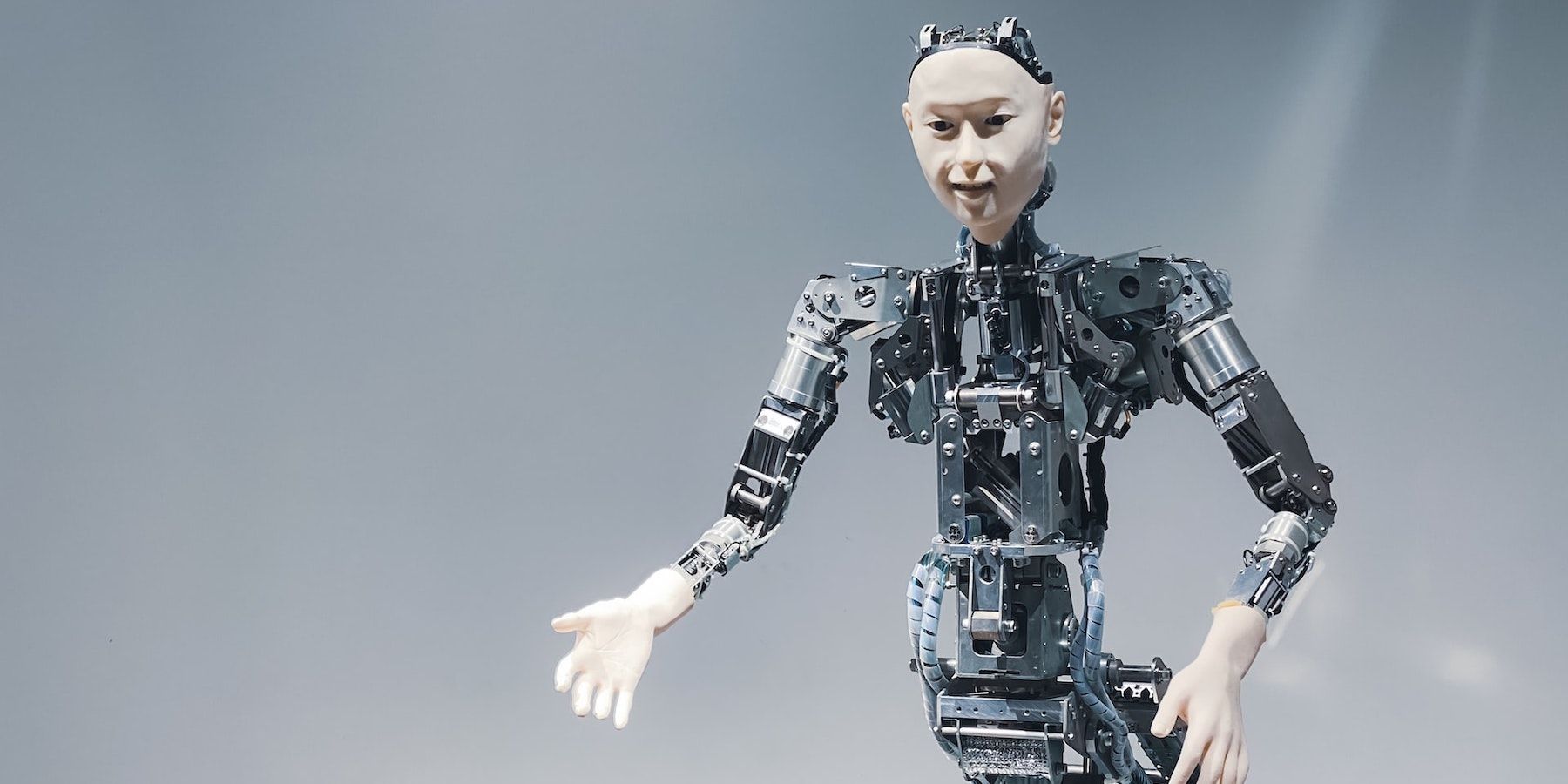

Giving robots a self-model for physical movement looks to be a positive development, and possibly a necessary one for eventually building freely moving humanoid machines. The idea does have skeptics, because physical awareness is something of a precursor to self-awareness, which many would prefer not to give machines. For that reason, these experiments test only the benefits of giving a machine a physical self-model, not a mental one. The approach looks promising for applications as broad as robotics, self-driving cars, and more.

Source: Columbia University