Meta says its new open-source AI model, ImageBind, is a step toward systems that better mimic the way humans learn: drawing connections between multiple types of data at once, much as humans rely on multiple senses. Mainstream interest in generative AI has exploded in recent years with the rise of text-to-image generators like OpenAI's DALL-E and conversational models like ChatGPT. Each of these systems is trained on massive datasets of a single type of material, such as images or text, so that it can ultimately learn to produce its own.

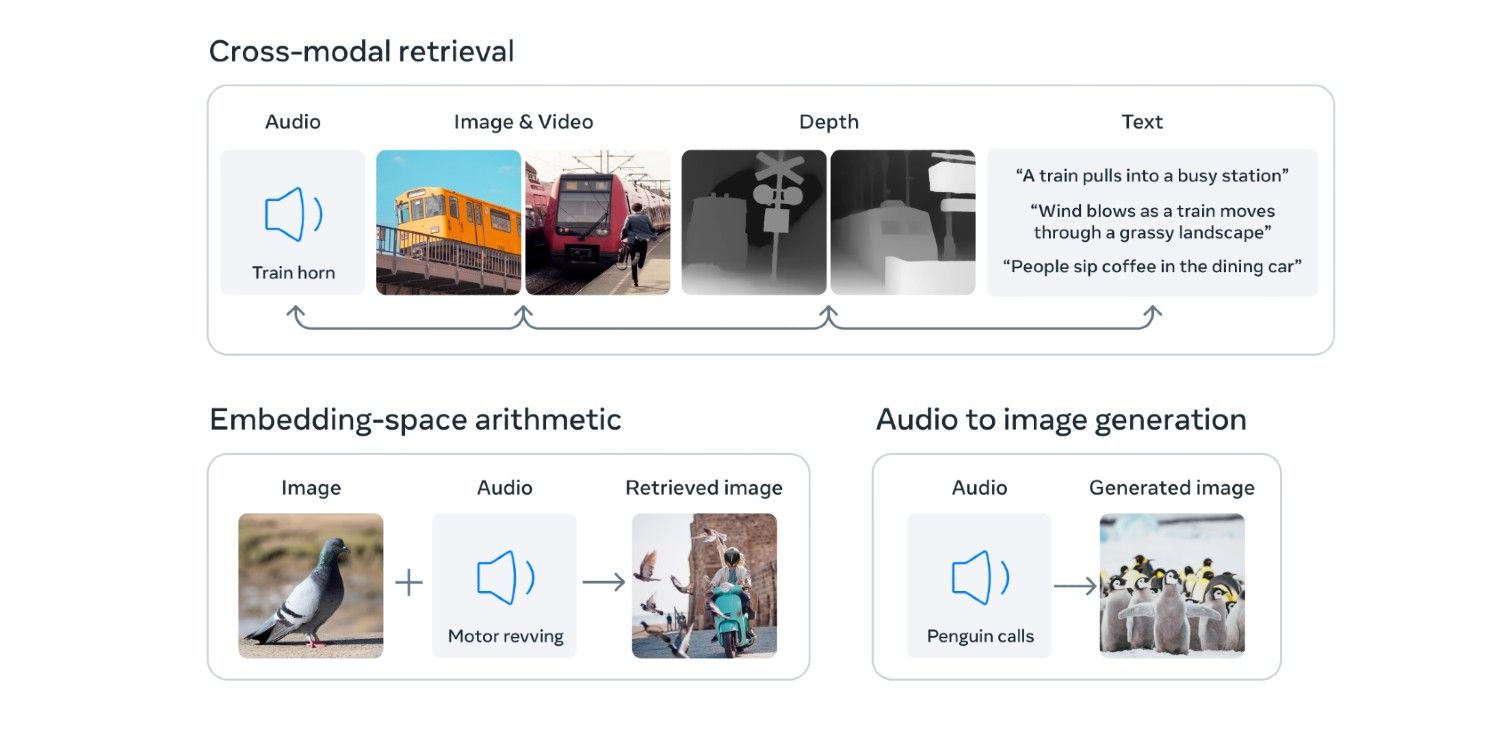

With ImageBind, Meta aims to facilitate the development of AI models that can grasp the bigger picture. Taking a more “holistic” approach to machine learning, it can link six different types of data: text, visual (image/video), audio, depth, temperature (thermal infrared imagery), and movement (readings from inertial measurement units, or IMUs). The ability to draw connections between more types of data allows the AI model to take on more complex tasks and produce more complex results. ImageBind could be used to generate visuals based on audio clips and vice versa, according to Meta, or add in environmental elements for a more immersive experience.

What Makes Meta's ImageBind Different

According to Meta, “ImageBind equips machines with a holistic understanding that connects objects in a photo with how they will sound, their 3D shape, how warm or cold they are, and how they move.” Current AI models have a more limited scope. They can learn, for example, to spot patterns in image datasets and, in turn, generate original images from text prompts, but what Meta envisions goes much further.

Static images could be turned into animated scenes using audio prompts, Meta says, or the model could be used as “a rich way to explore memories” by allowing a person to search their messages and media libraries for specific events or conversations using text, audio, and image prompts. It could take something like mixed reality to a new level. Future versions could push its capabilities further by bringing in even more types of data, such as “touch, speech, smell, and brain fMRI signals” to “enable richer human-centric AI models.”
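To make the cross-modal search idea more concrete, here is a minimal sketch of how such a lookup could work if every item, whatever its type, were represented as a vector in one shared space. It is an illustration only and does not use ImageBind itself: the file names, the three-dimensional vectors, and the `search` helper are all hypothetical, hand-made stand-ins for what a real model would produce.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vec, library, top_k=2):
    """Return the names of the top_k library items most similar to the query."""
    scored = [(cosine(query_vec, vec), name) for name, vec in library.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# Hypothetical embeddings, invented for illustration. In a real system a
# model would map each photo, audio clip, message, or video to a vector.
library = {
    "photo_beach.jpg": [0.9, 0.1, 0.0],
    "audio_waves.wav": [0.7, 0.3, 0.2],
    "message_birthday.txt": [0.0, 0.9, 0.3],
    "video_party.mp4": [0.1, 0.8, 0.4],
}

# Stand-in for the embedding of a text prompt such as "day at the beach".
text_query = [0.85, 0.15, 0.05]

results = search(text_query, library)
print(results)
```

With these made-up vectors, the text query ranks the beach photo and the recording of waves above the birthday message and the party video, even though the query and the matches are different kinds of media. The point of the sketch is only that a shared vector space lets one type of data be used to look up another.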

ImageBind is still in its infancy, though, and the Meta researchers are inviting others to explore the open-source AI model and build on it. The team has published a paper alongside the blog post detailing the research, and the code is available on GitHub.