Apple announced several changes to iOS, the operating system of the iPhone, at its 2021 Worldwide Developers Conference. One of the most useful additions lets the iPhone understand text in photos. The feature integrates with Apple's rich results capability, so calling a phone number, sending an email, and more takes only a tap on the highlighted text.

Apple Photos is where every photo and video recorded on the iPhone is stored. It opens when tapping the thumbnail image in the Camera app and integrates with iCloud to securely back up media online. The Photos app also includes several filters and adjustment controls for both photos and videos, with live previews of each effect, even on 4K video at 60 frames per second. Live Photo effects are somewhat hidden, requiring a swipe up to reveal the looping and long exposure options. Apple Photos gains features and improvements each year, and more are coming later this year with iOS 15.

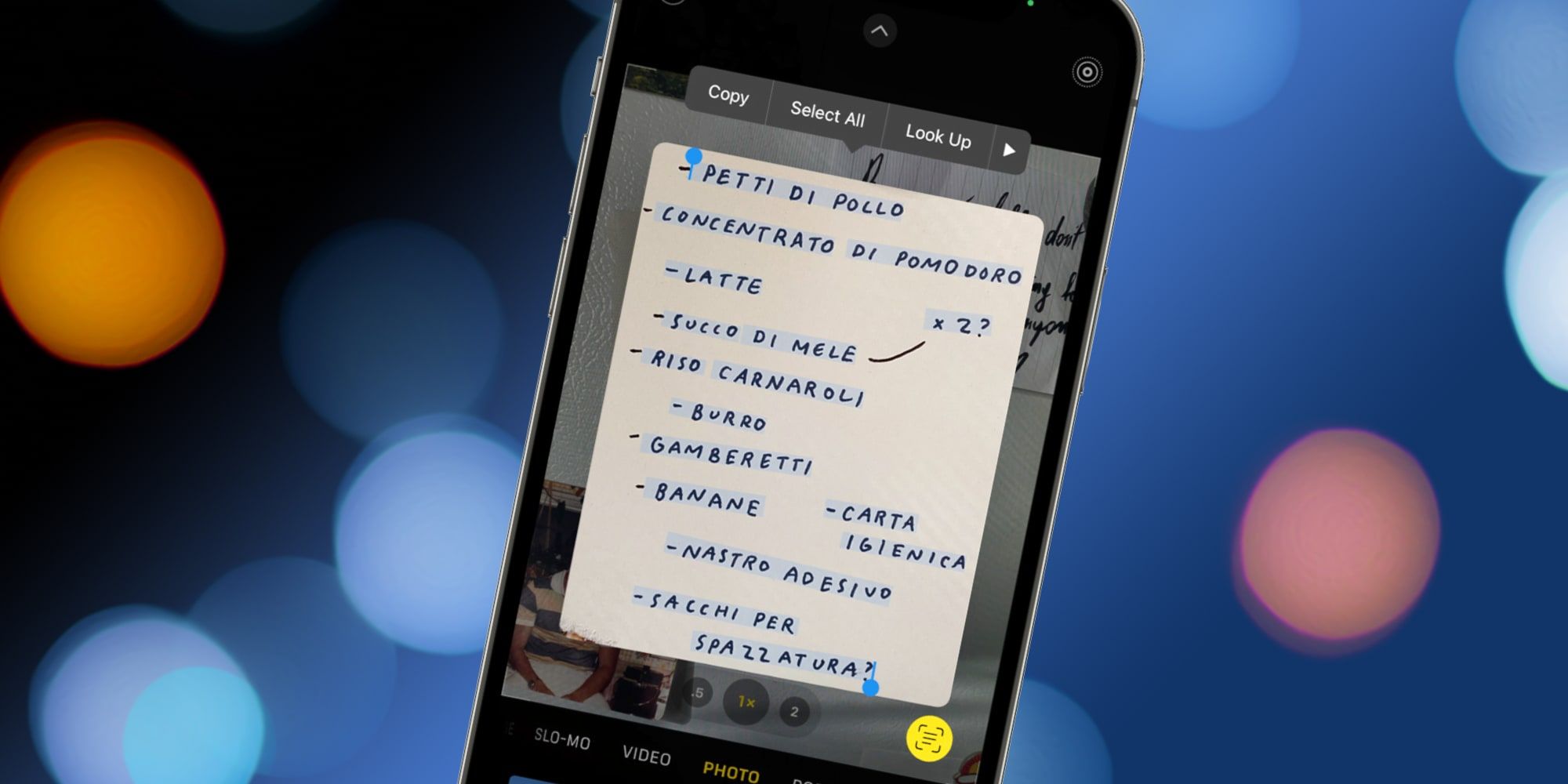

With iOS 15, Apple is giving the iPhone the ability to read and understand text in photos. Android users have enjoyed something similar with Google Photos for several years, so it's nice to see Apple catching up with its Photos app. Called Live Text, the feature uses optical character recognition (OCR) on photos in the library and makes any text it finds accessible with a tap. The text is also searchable, meaning a Spotlight search of the iPhone will bring up photos that contain matching text alongside app, document, and web results.

Using iPhone's Live Text Feature

When iOS 15 arrives later this year, the iPhone will be able to find and use text that appears in photos. Apple will treat any text as a rich result, meaning a phone number can be tapped to start a call and an email address can be tapped to open the default mail app. Contacts will be recognized as well. Blocks of text in a photo can be selected, copied, and pasted across any Apple device using Continuity. Live Text also understands seven languages: English, Chinese, French, Italian, German, Spanish, and Portuguese, each with the same tappable actions.

The Photos app is also gaining improved object recognition, allowing a Spotlight search to bring up matching photos in the results list. Apple, of course, pointed out that these photo and text recognition abilities rely on on-device artificial intelligence, which keeps that information secure and private. Google offered many of these features on Android before Apple did, but Apple typically handles usability and privacy better. Live Text, coming with iOS 15, will make it much easier to capture text in the real world and use it on the iPhone.

Source: Apple