Facebook claims to have achieved a breakthrough with a new system that not only detects deepfakes but can also reverse engineer the manipulated media to find the AI engine used to create it, which could eventually help trace the bad actor behind it. Deepfakes have emerged as a sophisticated method of spreading misinformation and can take many forms, from fake slanderous pictures to fabricated adult videos. However, that’s just the tip of the iceberg. The harmful implications are worrying enough that even the FBI has warned about the potential of deepfakes to be weaponized for foreign influence operations. Deepfakes have been used with amusing results, but the scope for misuse is vast.

Work is underway in the research community to combat deepfakes created with malicious intent, and tech giants are lending a hand. Microsoft, for example, has developed an AI-based tool called Microsoft Video Authenticator to detect deepfakes. Last year, Facebook started removing manipulated media from its platform that could mislead people and sway their opinions ahead of the US presidential election. Now, the social media behemoth is stepping up its game against this threat.

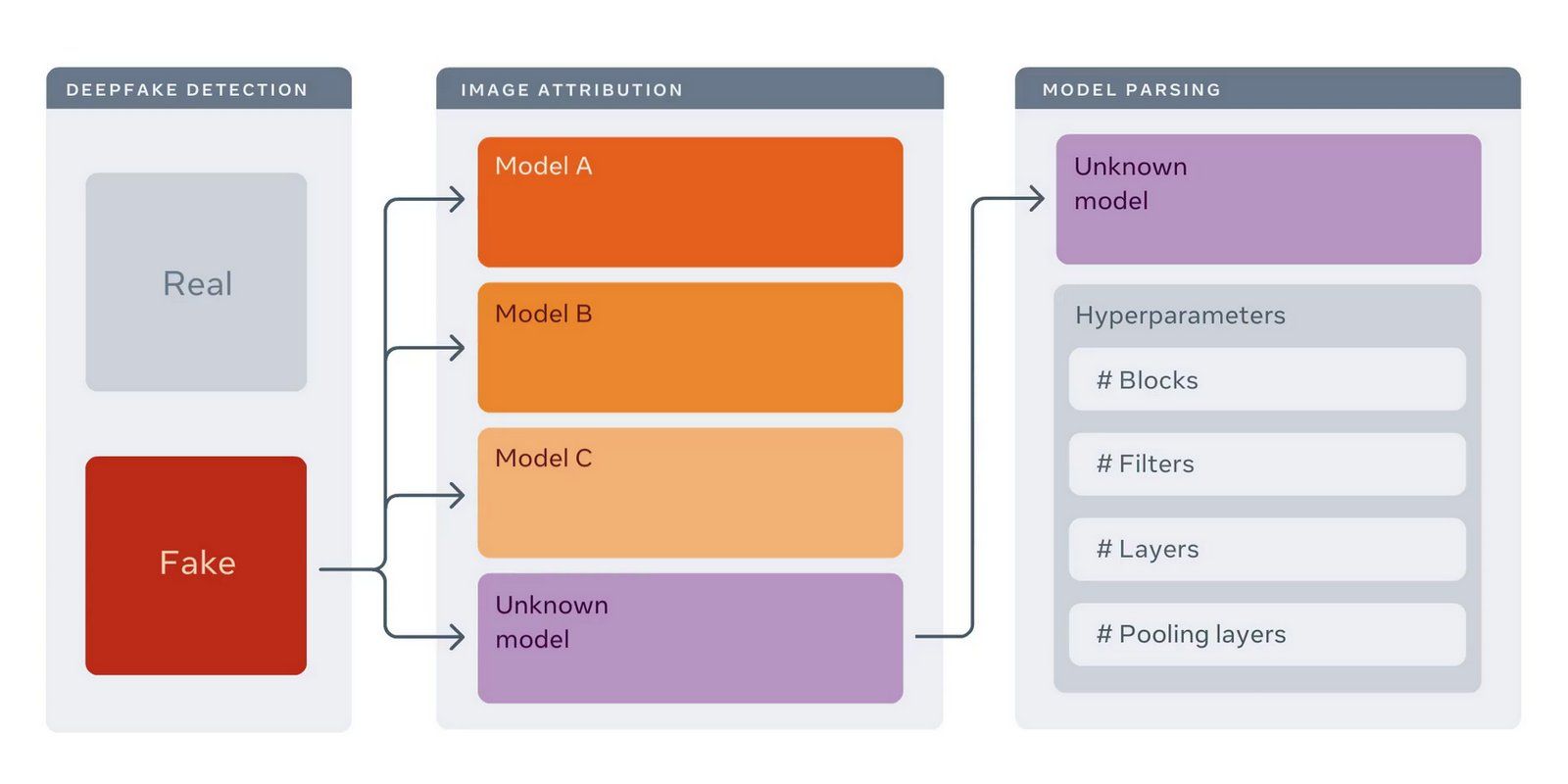

Created in collaboration with Michigan State University (MSU) researchers, Facebook’s AI-based system identifies manipulated media and then employs reverse engineering to detect the generative model that was used to produce the deepfake. The ultimate goal is to trace the deepfake generating software to a computer, and with that, the culprit behind it. Facebook notes that its model will essentially look for unique fingerprints left by a generative model in a sea of manipulated media, and then identify the point of origin.

How Facebook Is Tracing Deepfake Sources

Facebook is following in the footsteps of forensic experts who look for distinct patterns in images to identify the camera model used to take a particular set of pictures. In this case, the method developed by Facebook and MSU researchers will look for unique attributes left on manipulated media by the generative model behind it. Factors such as fingerprint magnitude, repetitive nature, frequency range, and symmetrical frequency response are used to create a complete fingerprint profile, which is subsequently used to infer the generative model’s hyperparameters. Researchers equate these hyperparameters to engine parts, while the generative model is likened to a car that uses these engine parts. Google, too, released a large dataset of deepfake videos a couple of years ago to aid research in the field.
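To make the four fingerprint factors more concrete, here is a rough Python sketch of how such properties could be measured on a single grayscale image. It is not Facebook's or MSU's system, which is not described in this level of detail here. The residual-after-blur "fingerprint", the function names `estimate_fingerprint` and `fingerprint_profile`, and the low-frequency cutoff of 0.0625 cycles per pixel are all assumptions made for illustration only.

```python
import numpy as np


def estimate_fingerprint(image):
    """Crude stand-in for a fingerprint: what is left after a 3x3 box blur.

    Assumption: a real system would estimate the fingerprint differently;
    a simple high-pass residual is used here only for illustration.
    """
    image = np.asarray(image, dtype=float)
    h, w = image.shape
    padded = np.pad(image, 1, mode="edge")
    # Average the nine shifted copies of the image (3x3 box filter).
    blurred = sum(
        padded[i:i + h, j:j + w] for i in range(3) for j in range(3)
    ) / 9.0
    return image - blurred


def fingerprint_profile(fp, low_cutoff=0.0625):
    """Summarize a fingerprint with four illustrative statistics."""
    h, w = fp.shape
    spectrum = np.abs(np.fft.fft2(fp))
    energy = spectrum ** 2
    eps = 1e-12

    # Magnitude: root-mean-square size of the fingerprint itself.
    magnitude = float(np.sqrt(np.mean(fp ** 2)))

    # Repetitive nature: a periodic pattern shows up as a sharp spectral peak.
    peak_ratio = float(spectrum.max() / (spectrum.mean() + eps))

    # Frequency range: share of energy above the (assumed) low-frequency cutoff.
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    high = np.hypot(fy, fx) >= low_cutoff
    high_freq_fraction = float(energy[high].sum() / (energy.sum() + eps))

    # Symmetry: compare the spectrum with its horizontal mirror, i.e. the
    # value at frequency (u, v) against the value at (u, -v).
    mirrored = np.roll(spectrum[:, ::-1], 1, axis=1)
    asymmetry = float(np.abs(spectrum - mirrored).mean() / (spectrum.mean() + eps))

    return {
        "magnitude": magnitude,
        "peak_ratio": peak_ratio,
        "high_freq_fraction": high_freq_fraction,
        "asymmetry": asymmetry,
    }


if __name__ == "__main__":
    # Toy input: a smooth gradient plus a faint checkerboard "artifact".
    rows, cols = np.indices((64, 64))
    gradient = np.tile(np.linspace(0.0, 1.0, 64), (64, 1))
    checkerboard = 0.05 * ((rows + cols) % 2 * 2 - 1)
    print(fingerprint_profile(estimate_fingerprint(gradient + checkerboard)))
```

In a setup like the one the researchers describe, a profile of this kind would feed a further model that predicts hyperparameters. That second stage is omitted here because the article gives no detail on it.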

Facebook says the model parsing system it employs is more efficient when it comes to real-world deployment of the technology by law enforcement authorities and cybersecurity experts. The new method is said to be especially useful in scenarios where a trove of manipulated media is the only resource at the disposal of experts and authorities. The end goal is to develop better investigative tools that can be relied upon to tackle large-scale disinformation campaigns using deepfakes.

With the technology behind deepfakes evolving at a rapid pace, solutions like the one Facebook has now announced are all the more important. Facebook says that the new system will be open-sourced for the research community, with MSU releasing the data set, code, and trained models, so that others can help to further develop more efficient deepfake tracing methods.

Source: Facebook