Apple recently announced its new Live Text feature for iPhone, which is remarkably similar to Google Lens. While Lens has been in active development for several years, Apple's solution is currently in developer beta testing and won't officially launch until the iOS 15 update arrives later this year. Compatible Macs running macOS Monterey and iPads running iPadOS 15 will receive the same text and object recognition features.

Google Lens first appeared with the company's Pixel 2 smartphone in 2017. Text recognition software existed long before that, but Google's implementation combined the capability with Google Assistant and the company's search engine. Actions based on the text found in photographs and live camera views are more powerful than simply extracting the text from a photo, as previous OCR (optical character recognition) software did. Object recognition adds another layer of complexity, identifying people and animals for use in its online image storage service, Google Photos. Apple enhanced its Photos app with similar object recognition for the purpose of organizing images, but there was no real challenge to Google Lens until Live Text was announced.

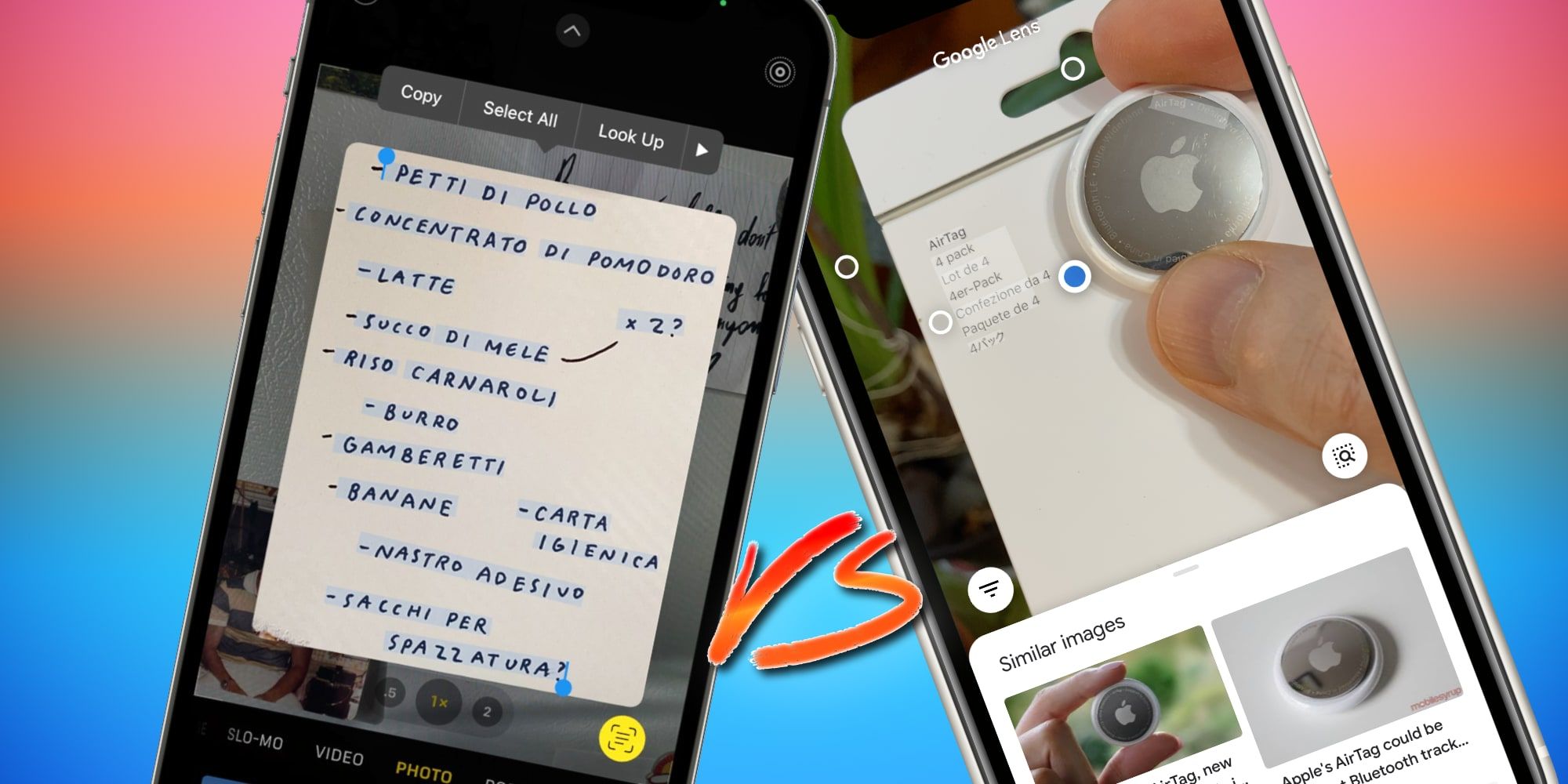

With the upcoming iOS 15 upgrade, Apple's Live Text instantly identifies any text in a photo, making every word, letter, number, and symbol selectable, searchable, and available for various actions. For example, any portion or all of the words in a photo can be translated, and email addresses and phone numbers become links that launch the Mail and Phone apps when tapped or clicked. Spotlight search will also be able to find photos based on the text and objects in the images, making it much easier for those with large libraries to recover information or locate a particular photo. These are very exciting and useful additions to Apple devices but, in truth, iPhone and iPad users have had access to similar capabilities since 2017, when Google added Lens to its iOS search app. Google Photos is also available on Apple devices, so searching for text and objects works there too. The two feature sets are very close, and Apple still has several months before the official launch. For owners of compatible Apple devices, the Live Text features will provide capabilities that have become common on many Android phones.
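The link behavior described above, where email addresses and phone numbers found in a photo become tappable, can be roughly illustrated with a short sketch. This is not how Apple or Google implement it; it is a minimal Python approximation that assumes the text has already been extracted from the image, and the function name `find_actions` and both patterns are hypothetical and intentionally simple.

```python
import re

# Simplified, illustrative patterns (assumptions, not Apple's or Google's rules).
# Real data detectors handle many more formats and locales.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def find_actions(recognized_text):
    """Return (matched_text, link) pairs for emails and phone numbers.

    `recognized_text` stands in for the output of a text recognition step.
    """
    actions = []
    for match in EMAIL_RE.finditer(recognized_text):
        # An email address maps to a mailto: link that a mail app can open.
        actions.append((match.group(), "mailto:" + match.group()))
    for match in PHONE_RE.finditer(recognized_text):
        # Keep only digits and a leading plus sign for the tel: link.
        digits = re.sub(r"[^\d+]", "", match.group())
        actions.append((match.group(), "tel:" + digits))
    return actions


sample = "Call 555-867-5309 or write to hello@example.com"
for text, link in find_actions(sample):
    print(text, "->", link)
```

The recognition step itself is the hard part and is left out here; the sketch only shows why recognized text is more useful than a flat image once patterns in it can be mapped to actions.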

Live Text Versus Google Lens: Compatibility & Privacy

Apple’s new Live Text and Visual Look Up features require a relatively new iPhone or iPad with an A12 processor or newer. For Mac computers, only the newest models that use Apple's M1 chip are compatible. Intel-based computers, even those less than a year old, will not have access to Live Text. Meanwhile, Google Lens and Google Photos work their magic on a much wider variety of mobile devices and computers. Lens was initially only available on Pixel phones, then expanded to a few other Android phones and Chrome OS devices. Most other Android devices gained access through Google Photos. Through a browser, Windows, Mac, and Linux computers can use Google's robust set of image recognition features, with Lens showing as an option when text is recognized in a photo. This means Google Lens is available for almost every recent device from any manufacturer. Google also has much more experience with image recognition, having launched Lens several years before Apple’s Live Text, so Google Lens will likely be more accurate and have more features overall. Since Live Text hasn’t been made available to the public yet, more features may be added and compatibility might grow over time, just as it did with Google Lens.

Apple limited Live Text to its recent in-house processors because of its dedication to privacy. While Google Lens requires an active internet connection, Apple's Live Text and Visual Look Up do not, with the processing handled by the AI capabilities of the chips built into an iPhone, iPad, or M1 Mac. This might bring better performance than Google's solution when using a slow internet connection. Apple could have written the code to execute Live Text on Intel processors, since a Mac Pro offers much higher performance than an M1 Mac. However, Apple is transitioning away from Intel and is less likely to write complicated algorithms that rely on specialized hardware for those older systems. This is bad news for those who purchased an Intel Mac last year, but it isn't really a surprise. For Apple devices that aren't compatible, Google has a very good solution for text recognition, translation, and more through Google Photos and the Google search mobile app. For owners of an M1 Mac or a recent iPhone, Apple's Live Text provides a privacy-focused answer that integrates better with Apple's Photos app and the entire Apple ecosystem.