Apple is making a key change to its Child Sexual Abuse Material (CSAM) protection tool in the Messages app, choosing to disable parental alerts when children under 13 send or receive sexually explicit images. The tool was detailed earlier this year as a means of protecting young users from the predatory behavior of bad actors they might come into contact with. The announcement was met with mixed reactions, as experts worried that Apple was sacrificing privacy by scanning the images users share in its Messages app.

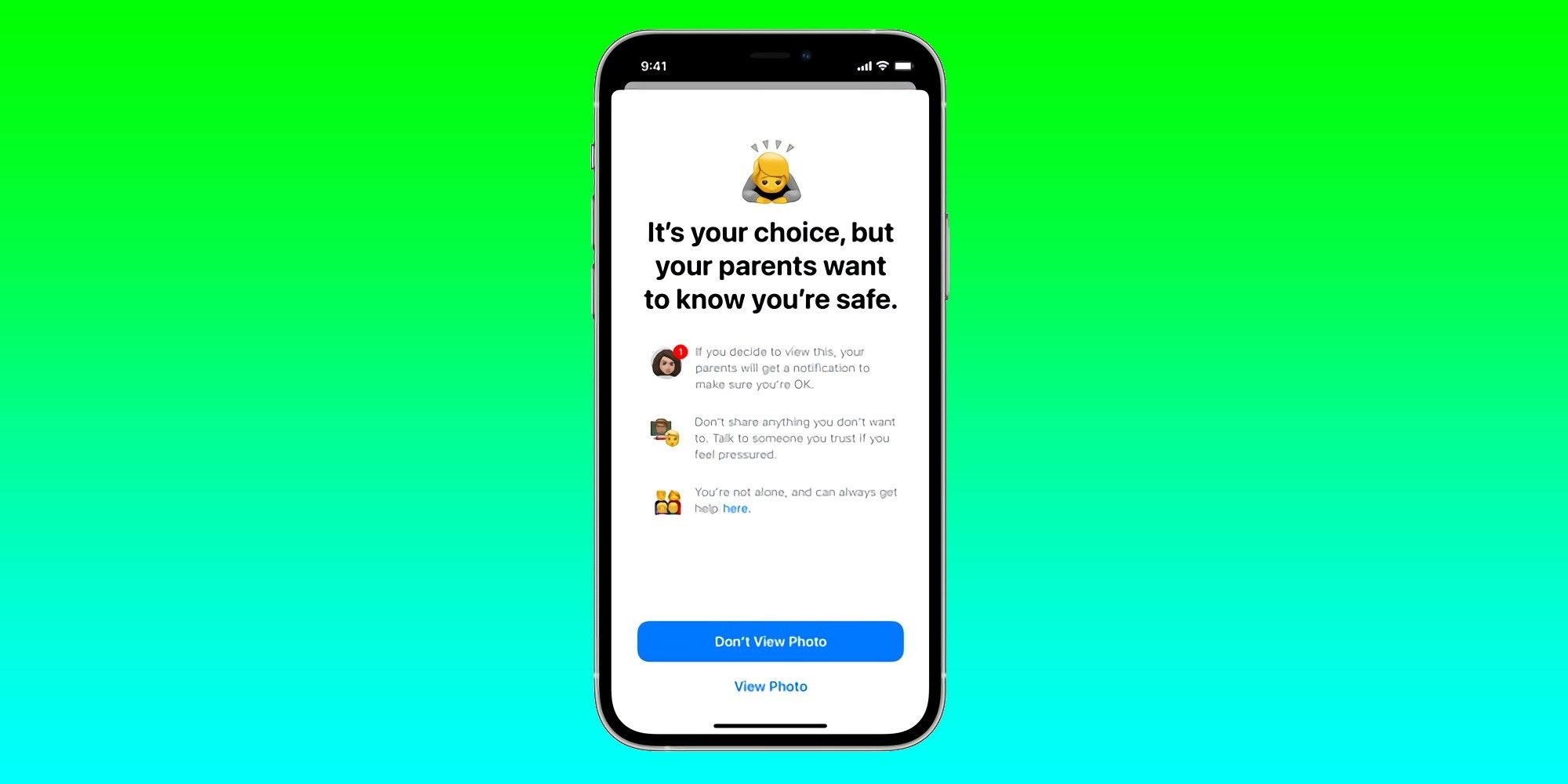

As a reminder, Apple uses end-to-end encryption as a security protocol in Messages, which means only the sender and recipient can read the texts exchanged between them. Not even Apple can intercept and read the conversation. The CSAM protection feature in Messages has to be enabled by parents for kids under the age of 18 using the Family Sharing system. Once activated, an AI algorithm kicks in and automatically blurs every image it deems explicit. The system then shows a warning message describing the risks of viewing such an image, which users can choose to heed or ignore.

According to a report by The Wall Street Journal, the system will continue to work as described, with one key exception. Originally, the CSAM defense in Messages was supposed to alert parents if iPhone users under the age of 13 chose to view a problematic image despite being warned. However, Apple has now decided to drop the parental notification, which means viewing a sexually explicit image will no longer alert parents. The change will be implemented following the rollout of iOS 15.2, which introduces CSAM protection in the Messages app. Apple previously had to delay the CSAM features for Messages and iCloud photo scanning after intense backlash from privacy experts.

Taking The Right Steps, But Concerns Remain

Apple appears to have made the move following backlash from activists and child wellness experts, who warned that alerting parents can be risky for queer children. For young users who live in a household where one or both parents are unsupportive of their gender identity, the risk of abuse is high. Even so, Apple is not pulling the plug on the feature as a whole, despite another group claiming that it compromises the privacy Apple so proudly promotes for its ecosystem of products and services. There are also concerns that parental involvement is necessary, as children under 13 need guidance to handle such risky scenarios. Earlier this month, Facebook also introduced a reporting tool seeking to protect users from the menace of revenge porn.

Until now, the system was supposed to alert parents when children aged 12 or below opened an explicit image or tried to send one. Apple’s support document still carries the same guidelines and hasn’t been updated yet. “For accounts of children age 12 and under, parents can set up parental notifications which will be sent if the child confirms and sends or views an image that has been determined to be sexually explicit,” it says. For children between 13 and 17 years of age, parental alerts are optional. However, they will still be warned before opening problematic images they’ve received in Messages, and before sending such imagery.

Source: The Wall Street Journal, Apple